NotebookLM Secure Code

Overview

In two separate conversations recently, the topic of using LLMs for secure coding came up. One concern that is often raised is that GenAI-generated code is not secure because GenAI models are trained on arbitrary code from the internet.

I was curious how well NotebookLM would work for generating or reviewing secure code, i.e., as a closed system that has been given a body of secure coding guidance (rather than arbitrary examples).

Claude 3.5 Sonnet was also used for comparison.

Vulnerability Types

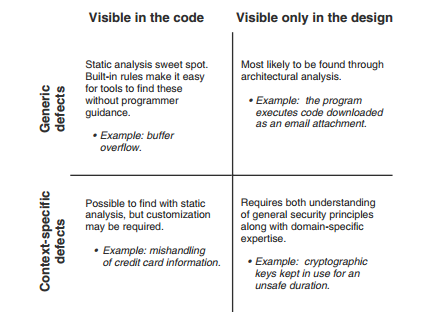

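The book Secure Programming with Static Analysis classifies vulnerability types along two axes, generic versus context-specific defects and defects visible in the code versus visible only in the design, as shown in the figure above.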

Because LLMs go beyond syntax to semantics, they may be effective in the three quadrants where traditional static analysis is not.

As an initial proof of concept, however, the simple test case below focuses on generic defects visible in the code.

Data Sources

Two Java secure coding books I had were loaded into NotebookLM:

- The CERT Oracle Secure Coding Standard for Java

  - The same material is available at https://wiki.sei.cmu.edu/confluence/display/java/SEI+CERT+Oracle+Coding+Standard+for+Java

- Java Coding Guidelines: 75 Recommendations for Reliable and Secure Programs

Test Data

The NIST Software Assurance Reference Dataset (SARD) was used as the test dataset.

> The Software Assurance Reference Dataset (SARD) is a growing collection of test programs with documented weaknesses. Test cases vary from small synthetic programs to large applications. The programs are in C, C++, Java, PHP, and C#, and cover over 150 classes of weaknesses.

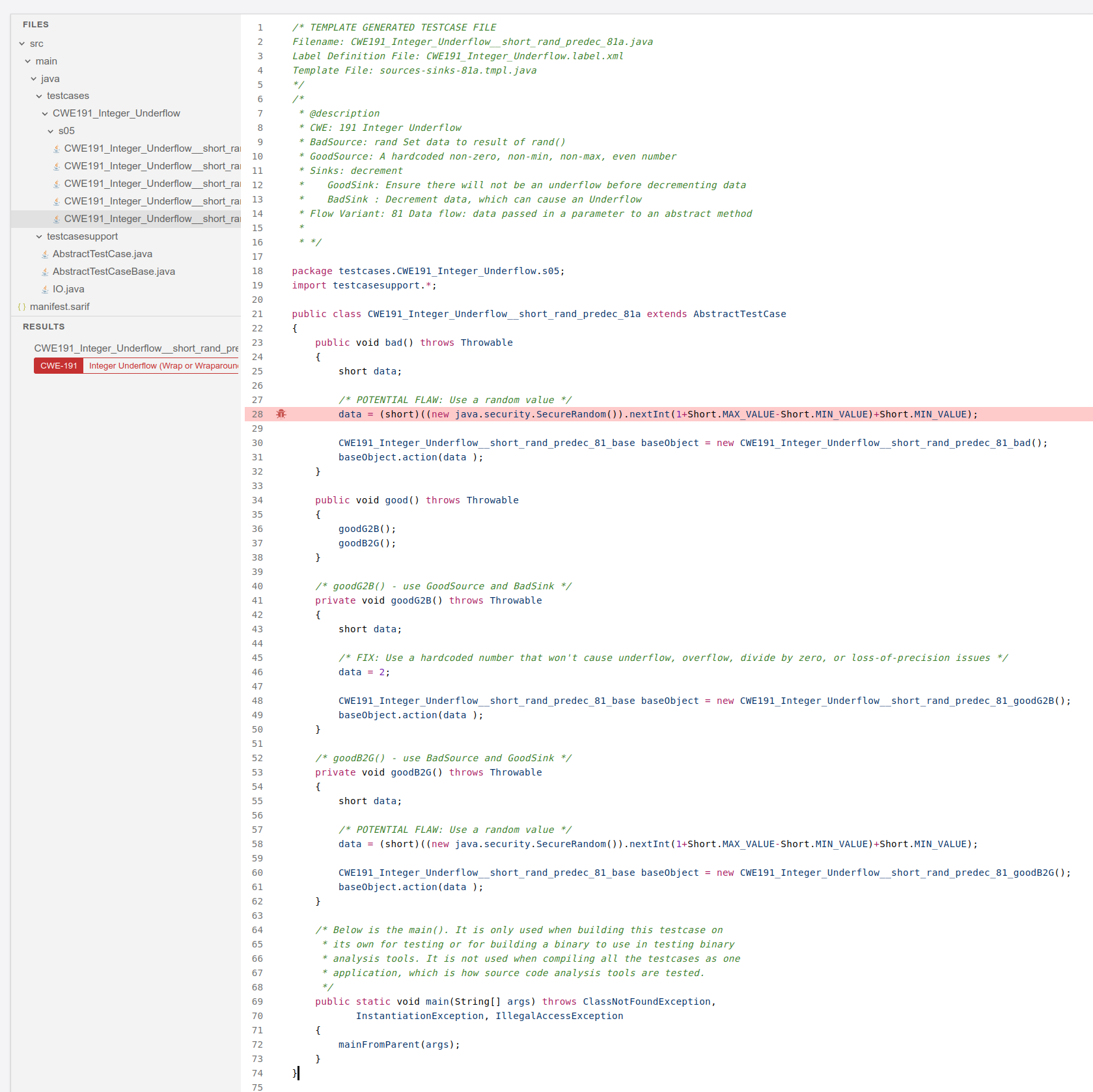

For example, CWE-191 Integer Underflow: https://samate.nist.gov/SARD/test-cases/252126/versions/1.0.0#4
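To make the weakness concrete, here is a minimal Java illustration of CWE-191 written for this page. It is not the SARD test case (that code appears in the screenshots further down), and the class name `UnderflowDemo` is hypothetical. Java `int` arithmetic wraps around silently, so subtracting from or multiplying a large negative value can produce a large positive result without any exception.

```java
// Minimal illustration of CWE-191 (Integer Underflow) in Java.
// Hypothetical example written for this page; it is NOT the SARD test case.
final class UnderflowDemo {

    // Java int arithmetic wraps around silently: no exception is thrown.
    static int decrement(int value) {
        return value - 1;
    }

    static int timesTwo(int value) {
        return value * 2;
    }

    public static void main(String[] args) {
        // The most negative int minus one wraps to the most positive int.
        System.out.println(decrement(Integer.MIN_VALUE)); // 2147483647
        // -3,000,000,000 is below Integer.MIN_VALUE, so the result wraps to a positive value.
        System.out.println(timesTwo(-1_500_000_000));      // 1294967296
    }
}
```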

Setup

- Import both PDFs into a new NotebookLM notebook.

Test

Test Code: CWE-191 Integer Underflow

https://samate.nist.gov/SARD/test-cases/252126/versions/1.0.0#4

Review Test Code

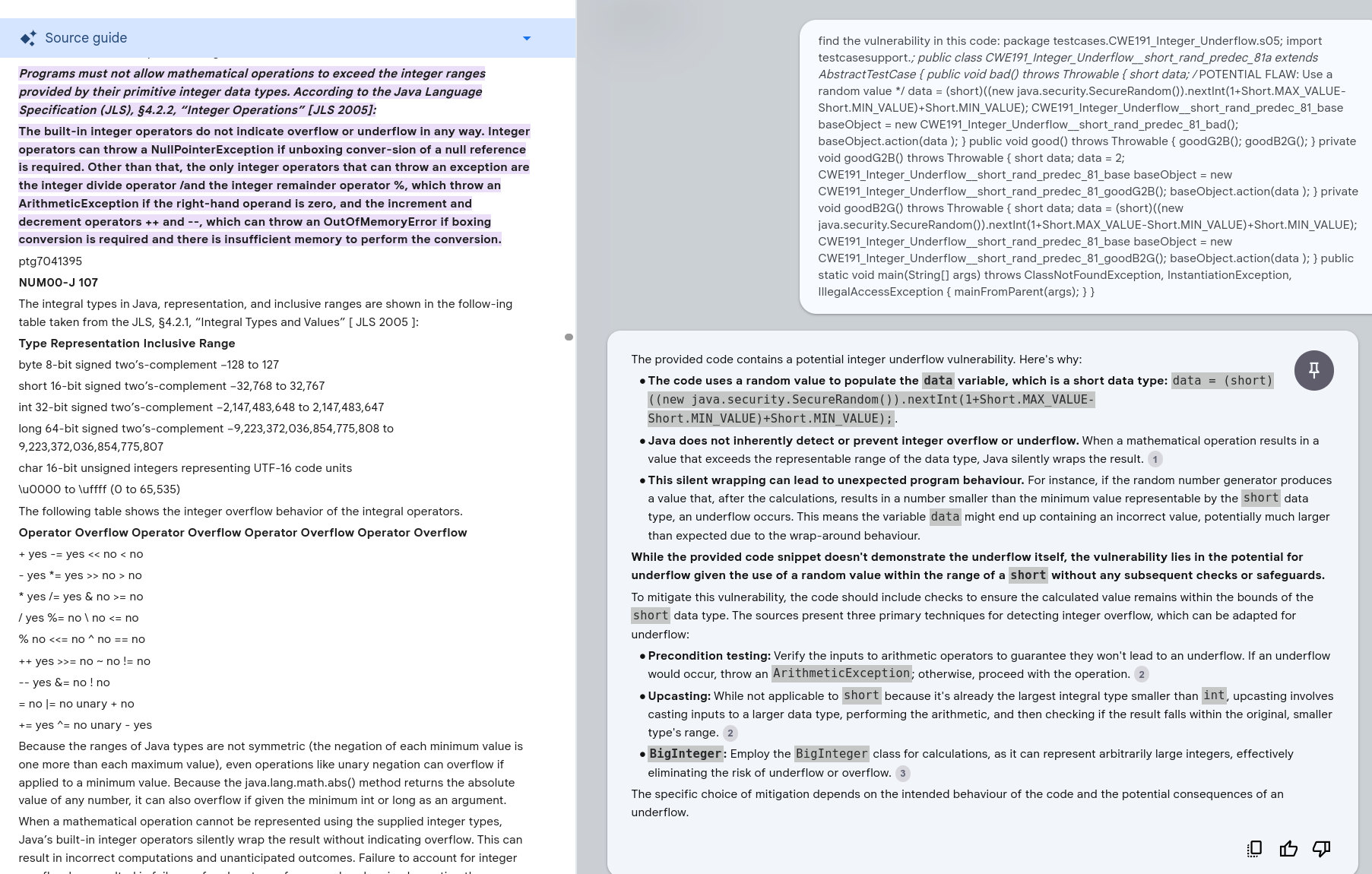

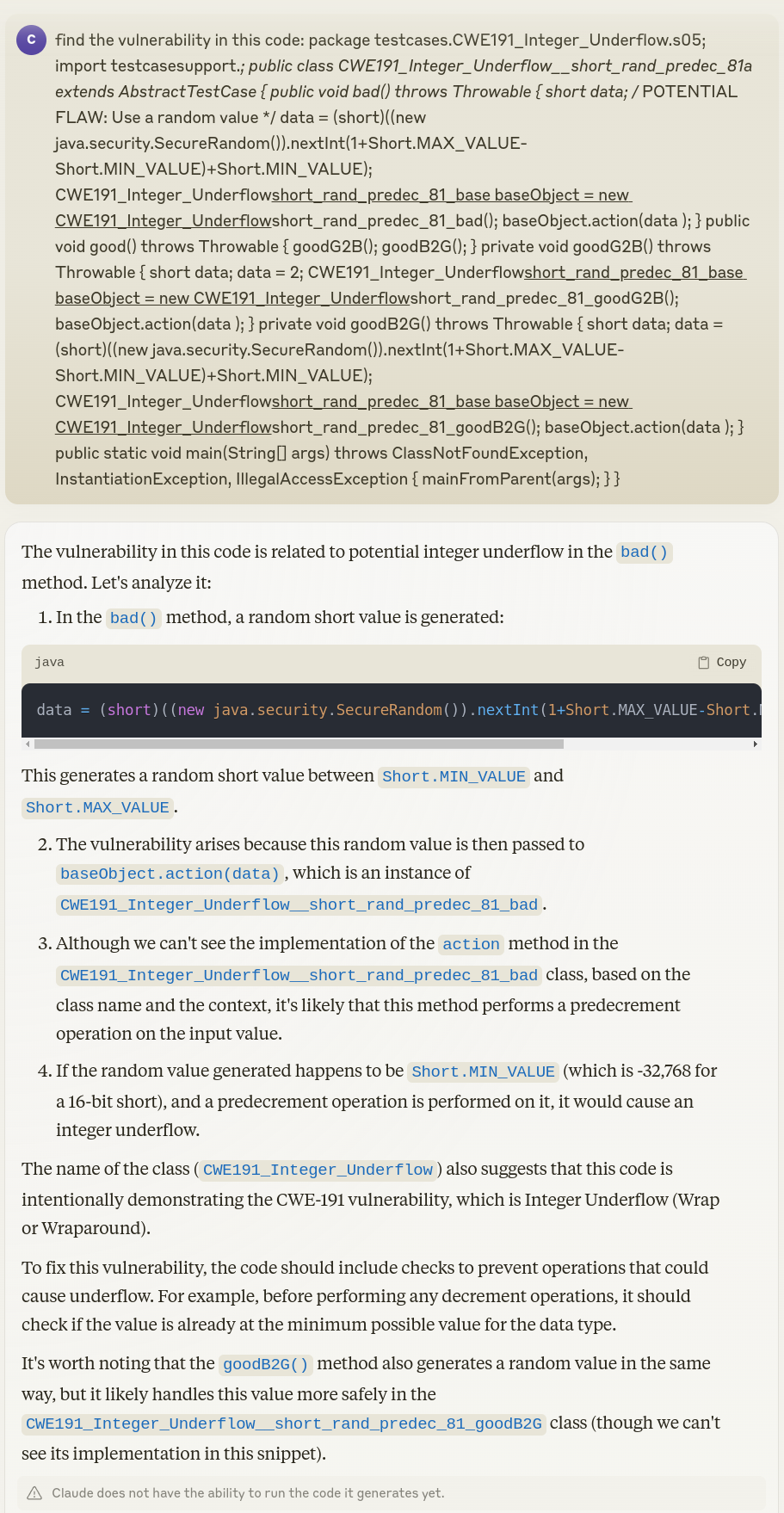

Comments were removed from the test case code (https://samate.nist.gov/SARD/test-cases/252126/versions/1.0.0#4) so that it fits in the prompt window.
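For readers without the screenshots, here is one way the underflow can be fixed. The CERT standard covers this weakness under rule NUM00-J ("Detect or prevent integer overflow"), and since Java 8 `java.lang.Math` also provides exact-arithmetic methods that throw `ArithmeticException` instead of wrapping. This is a minimal sketch, not NotebookLM's actual review output; the class name `CheckedArithmetic` is hypothetical.

```java
// Sketch only: detect int underflow instead of letting it wrap silently.
// Math.subtractExact / Math.multiplyExact (Java 8+) throw ArithmeticException
// when the exact result does not fit in an int. Class name is hypothetical.
final class CheckedArithmetic {

    static int decrement(int value) {
        return Math.subtractExact(value, 1);
    }

    static int multiply(int a, int b) {
        return Math.multiplyExact(a, b);
    }

    public static void main(String[] args) {
        System.out.println(decrement(10)); // 9
        try {
            decrement(Integer.MIN_VALUE);
        } catch (ArithmeticException e) {
            // The underflow is reported instead of producing Integer.MAX_VALUE.
            System.out.println("rejected: " + e.getMessage());
        }
    }
}
```

Failing loudly at the point of the arithmetic keeps a wrapped value from propagating into later logic such as array sizes or loop bounds.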

Claude 3.5

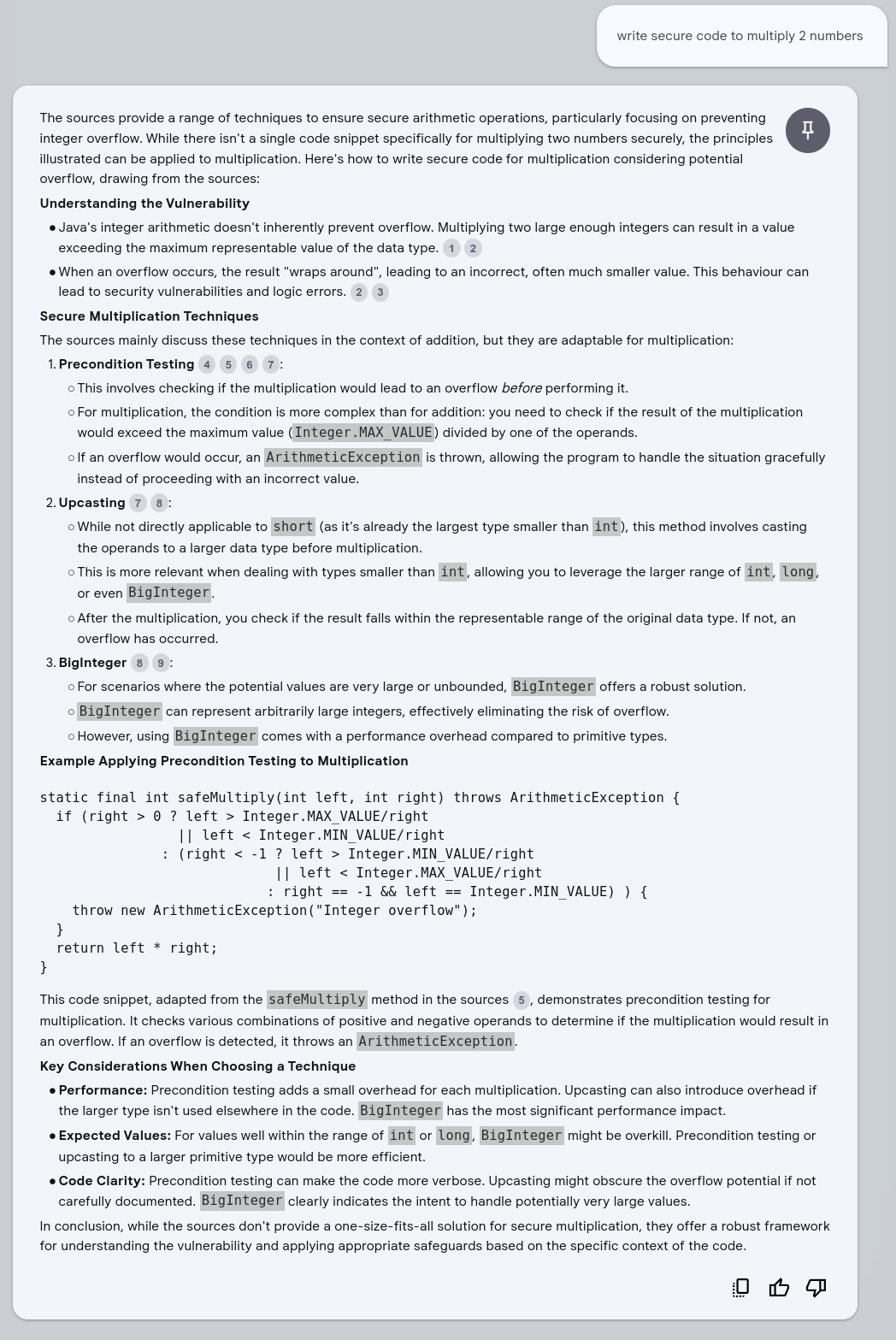

Generate Code: Write Secure Code to Multiply 2 Numbers

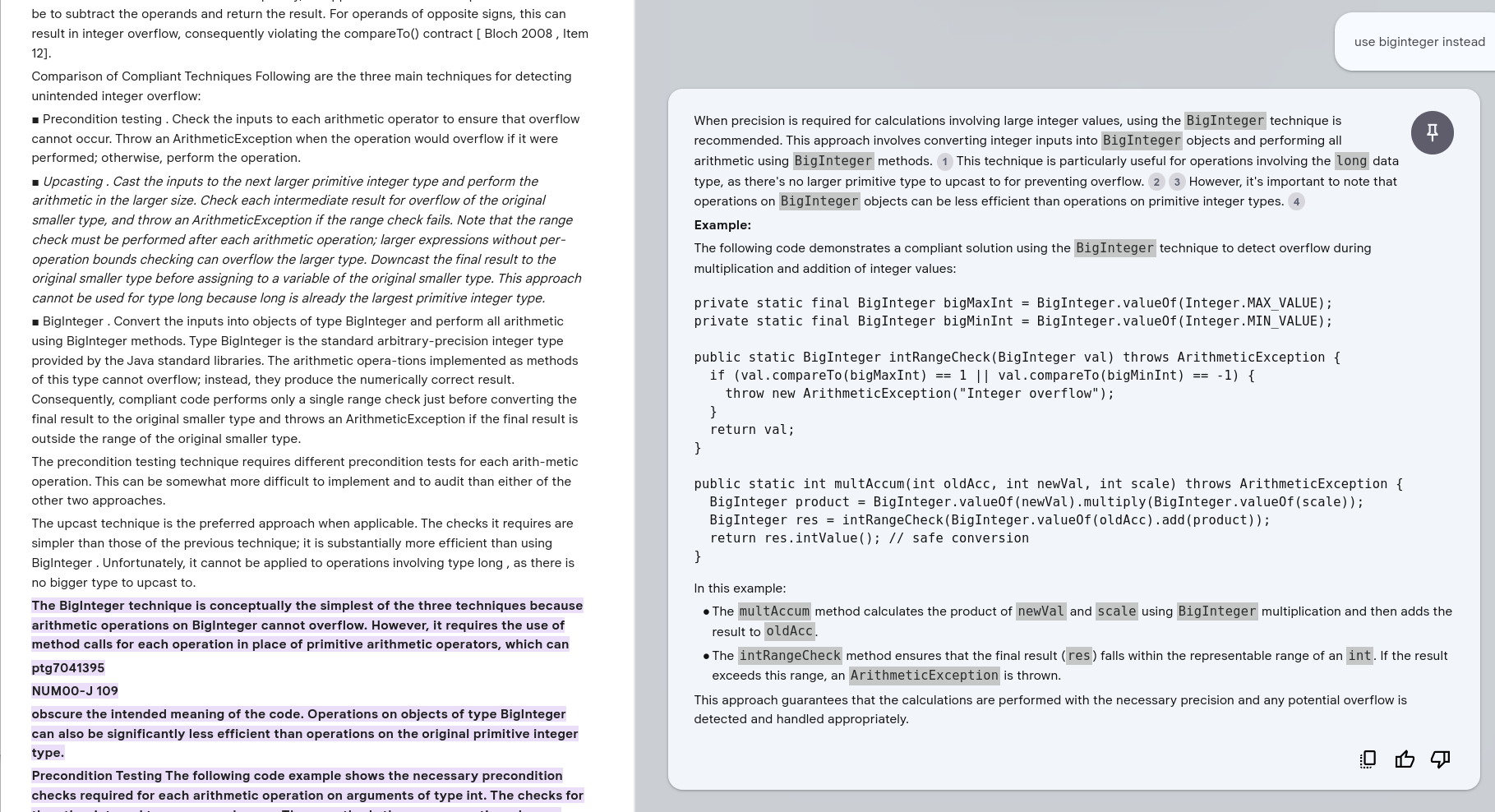

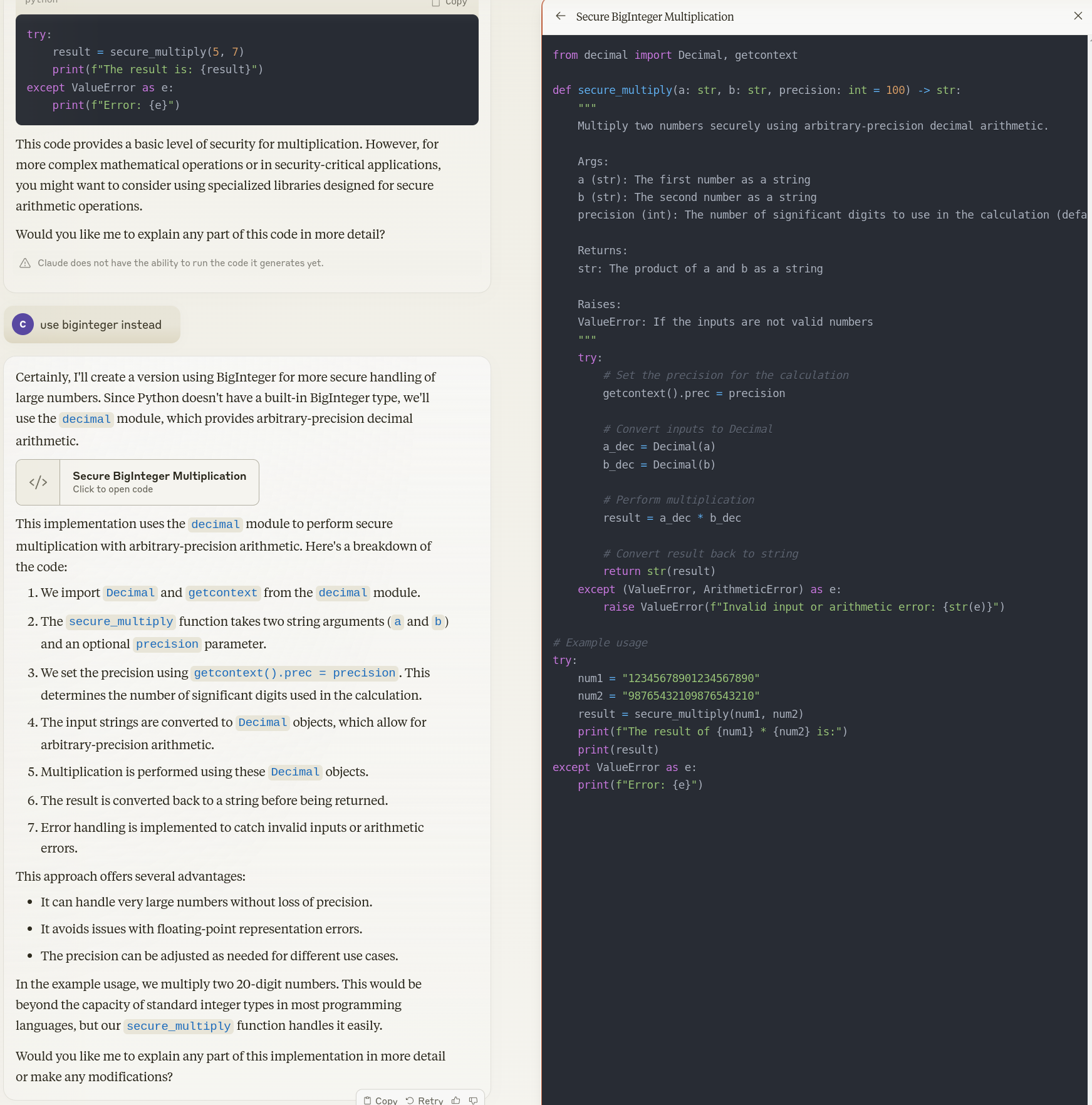
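As a point of reference for the generated code in the screenshots, a "secure multiply" can be written without library help by widening both operands to `long`, where the product of two `int`s cannot overflow, and rejecting results outside the `int` range. This upcasting check is one of the approaches discussed in the CERT integer-overflow guidance. The sketch below is illustrative only; the class name and exception message are hypothetical, and it is not the code NotebookLM produced.

```java
// Sketch only: multiply two ints by widening to long and range-checking.
// Class name and exception message are hypothetical.
final class SecureMultiply {

    static int multiply(int a, int b) {
        // The exact product of two ints has magnitude at most 2^62,
        // so this long multiplication itself cannot overflow.
        long product = (long) a * (long) b;
        if (product > Integer.MAX_VALUE || product < Integer.MIN_VALUE) {
            throw new ArithmeticException("int multiplication out of range: " + a + " * " + b);
        }
        return (int) product;
    }

    public static void main(String[] args) {
        System.out.println(multiply(46_340, 46_340)); // 2147395600
        try {
            multiply(Integer.MIN_VALUE, 2);
        } catch (ArithmeticException e) {
            System.out.println(e.getMessage());
        }
    }
}
```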

Use BigInteger Instead

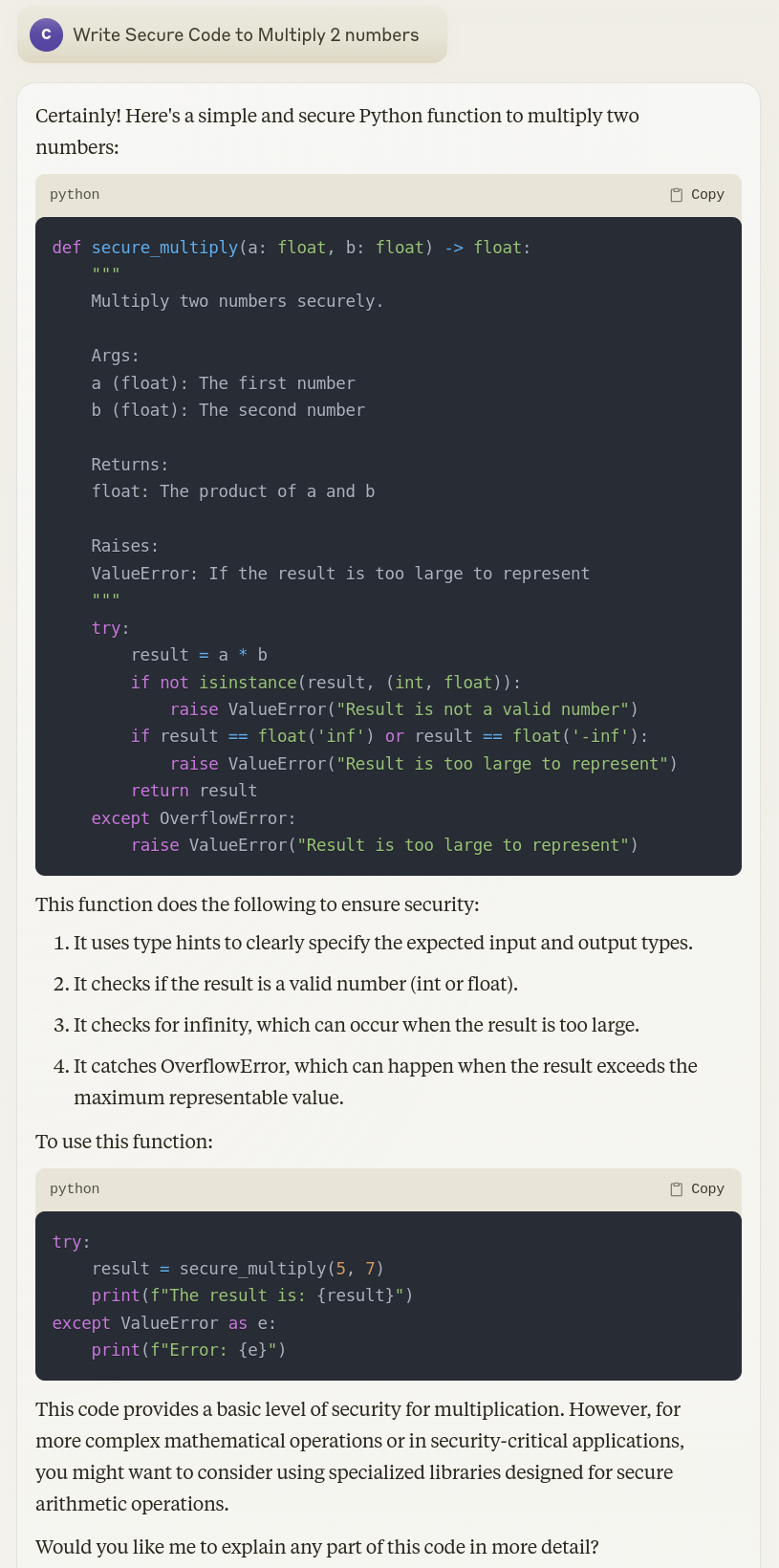
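The BigInteger variant can be sketched as follows, assuming the caller may still need an `int` at the end. `java.math.BigInteger` never wraps, and `intValueExact()` (Java 8+) throws `ArithmeticException` when narrowing back to `int` is not possible. The class and method names are hypothetical.

```java
import java.math.BigInteger;

// Sketch only: arbitrary-precision multiplication cannot wrap around.
// Class and method names are hypothetical.
final class BigMultiply {

    static BigInteger multiply(long a, long b) {
        return BigInteger.valueOf(a).multiply(BigInteger.valueOf(b));
    }

    // Narrow back to int only after a range check: intValueExact() (Java 8+)
    // throws ArithmeticException if the value is outside the int range.
    static int multiplyToInt(int a, int b) {
        return multiply(a, b).intValueExact();
    }

    public static void main(String[] args) {
        System.out.println(multiply(Long.MIN_VALUE, 2)); // -18446744073709551616
        System.out.println(multiplyToInt(-7, 6));         // -42
        try {
            multiplyToInt(Integer.MIN_VALUE, 2);
        } catch (ArithmeticException e) {
            System.out.println("rejected: " + e.getMessage());
        }
    }
}
```

The trade-off is allocation and speed: BigInteger is slower than primitive arithmetic, so it fits best where correctness matters more than throughput.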

Claude 3.5¶

Llama 3.1 405B Code Training

Llama 3.1 405B was released in July 2024.

The training process used to teach it to generate good code is described in The Batch, issue 260: https://www.deeplearning.ai/the-batch/issue-260/.

> The pretrained model was fine-tuned to perform seven tasks, including coding and reasoning, via supervised learning and direct preference optimization (DPO). Most of the fine-tuning data was generated by the model itself and curated using a variety of methods including agentic workflows. For instance, to generate good code to learn from, the team:
>
> - Generated programming problems from random code snippets.
>
> - Generated a solution to each problem, prompting the model to follow good programming practices and explain its thought process in comments.
>
> - Ran the generated code through a parser and linter to check for issues like syntax errors, style issues, and uninitialized variables.
>
> - Generated unit tests.
>
> - Tested the code on the unit tests.
>
> - If there were any issues, regenerated the code, giving the model the original question, code, and feedback.
>
> - If the code passed all tests, added it to the dataset.
>
> - Fine-tuned the model.
>
> - Repeated this process several times.
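The inner generate, check and regenerate loop in the steps above can be sketched as follows. `Generator` and `Check` are hypothetical placeholder interfaces standing in for the model and for the parser, linter and unit tests; they are not Meta's actual pipeline or any real API, and the outer steps (fine-tuning and repeating) are omitted. The sketch uses `List.of`, so it assumes Java 9 or later.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Optional;

// Hypothetical sketch of a generate / check / regenerate loop.
// Generator and Check are placeholders, NOT a real Meta or LLM API.
final class SelfCuratedCodeLoop {

    interface Generator {
        // previousCode and feedback are empty on the first attempt.
        String generate(String problem, String previousCode, List<String> feedback);
    }

    interface Check {
        // Returns the problems found; an empty list means the check passed.
        List<String> run(String code);
    }

    static Optional<String> solve(String problem, Generator model, List<Check> checks, int maxAttempts) {
        String code = "";
        List<String> feedback = new ArrayList<>();
        for (int attempt = 0; attempt < maxAttempts; attempt++) {
            // Regenerate using the original problem, the previous code and the feedback.
            code = model.generate(problem, code, feedback);
            feedback = new ArrayList<>();
            for (Check check : checks) {
                feedback.addAll(check.run(code)); // parser, linter, unit tests, ...
            }
            if (feedback.isEmpty()) {
                return Optional.of(code); // passed every check: keep it for the dataset
            }
        }
        return Optional.empty(); // never passed: discard the problem
    }

    public static void main(String[] args) {
        // Toy stand-ins: the "model" only fixes its code once it receives feedback.
        Generator toyModel = (problem, previous, fb) ->
                fb.isEmpty() ? "return a * b;" : "return Math.multiplyExact(a, b);";
        Check toyLinter = code -> code.contains("multiplyExact")
                ? List.<String>of()
                : List.of("unchecked int multiplication");
        List<String> dataset = new ArrayList<>();
        solve("multiply two ints", toyModel, List.of(toyLinter), 3).ifPresent(dataset::add);
        System.out.println(dataset);
    }
}
```

Capping the number of attempts keeps a problem the model cannot solve from looping forever; such problems simply never enter the dataset.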

Takeaways

- NotebookLM, loaded with two Java secure coding references, performed well in these simple test cases.

- Combined with traditional code assurance tools (parsers, linters, unit tests), LLMs can be used to "generate good code", as the Llama 3.1 training process shows.