LLMs for CyberSecurity

LLMs for CyberSecurity Users and Use Cases

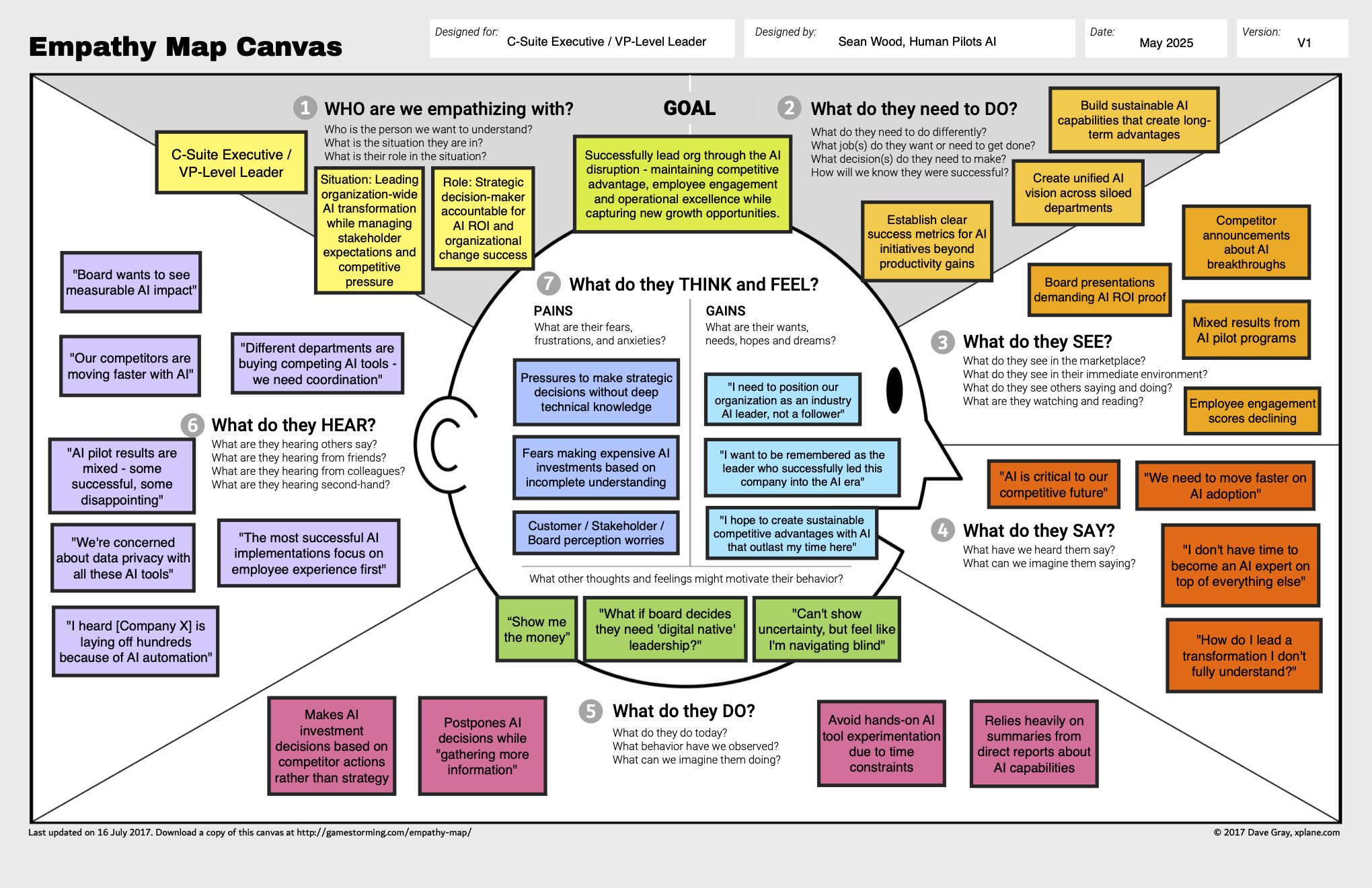

Image from Generative AI and Large Language Models for Cyber Security: All Insights You Need.

Empathy Map

See Original Post.

Tip

See also MITRE’s Innovation Toolkit (https://itk.mitre.org/toolkit/tools-at-a-glance/), a collection of proven and repeatable problem-solving methods to help you and your team do something different that makes a difference.

Targeted Premortem for Trustworthy AI

In general, it is good practice to start with the end in mind, à la the "Destination Postcard" from the book Switch by Dan and Chip Heath, which describes the aspirational positive outcomes.

The same end-in-mind thinking is useful for Premortems, which proactively identify failures so they can be avoided and the positive outcomes achieved.

Quote

The Targeted Premortem (TPM) is a variant of Klein's Premortem Technique, which uses prospective hindsight to proactively identify failures. This variant targets brainstorming on reasons for losing trust in AI in the context of the sociotechnical system into which it is integrated. That is, the prompts are targeted to specific evidence-based focus areas where trust has been lost in AI. This tool comes with instructions, brainstorming prompts, and additional guidance on how to analyze the outcomes of a TPM session with users, developers, and other stakeholders.

References

LLMs for CyberSecurity References

- Generative AI and Large Language Models for Cyber Security: All Insights You Need, May 2024

- A Comprehensive Review of Large Language Models in Cyber Security, September 2024

- Large Language Models in Cybersecurity: State-of-the-Art, January 2024

- How Large Language Models Are Reshaping the Cybersecurity Landscape | Global AI Symposium talk, September 2024

- Large Language Models for Cyber Security: A Systematic Literature Review, July 2024

- Using AI for Offensive Security, June 2024

Agents for CyberSecurity References

- Blueprint for AI Agents in Cybersecurity - Leveraging AI Agents to Evolve Cybersecurity Practices

- Building AI Agents: Lessons Learned over the past Year

Comparing LLMs

Several sites allow you to compare LLMs, for example:

- https://winston-bosan.github.io/llm-pareto-frontier/
    - LLM Arena Pareto Frontier: Performance vs Cost
- https://artificialanalysis.ai/
    - Independent analysis of AI models and API providers. Understand the AI landscape to choose the best model and provider for your use-case
- https://llmpricecheck.com/
    - Compare and calculate the latest prices for LLM (Large Language Models) APIs from leading providers such as OpenAI GPT-4, Anthropic Claude, Google Gemini, Meta Llama 3, and more. Use our streamlined LLM Price Check tool to start optimizing your AI budget efficiently today!
- https://openrouter.ai/rankings?view=day
    - Compare models used via OpenRouter
- https://github.com/vectara/hallucination-leaderboard
    - LLM Hallucination Rate leaderboard
- https://lmarena.ai/?leaderboard
    - Chatbot Arena is an open platform for crowdsourced AI benchmarking
- https://aider.chat/docs/leaderboards/
    - Benchmark to evaluate an LLM’s ability to follow instructions and edit code successfully without human intervention
- https://huggingface.co/spaces/TIGER-Lab/MMLU-Pro
    - Benchmark to evaluate language understanding models across broader and more challenging tasks

See also Economics of LLMs: Evaluations vs Pricing - Looking at which model to use for which task
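The "performance vs cost" Pareto frontier that several of these sites plot can be sketched in a few lines. This is a minimal sketch, assuming each model has a single benchmark score (higher is better) and separate per-million-token input and output prices. The model names, scores, prices, and token counts below are hypothetical placeholders, not figures from any of the sites above, and those sites may compute cost and frontiers differently (for example, with a blended price).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Model:
    name: str
    score: float         # benchmark score, higher is better (assumed single metric)
    input_price: float   # USD per 1M input tokens
    output_price: float  # USD per 1M output tokens


def request_cost(model: Model, input_tokens: int, output_tokens: int) -> float:
    """USD cost of one request, given per-million-token prices."""
    return (input_tokens * model.input_price
            + output_tokens * model.output_price) / 1_000_000


def pareto_frontier(models: list[Model], input_tokens: int,
                    output_tokens: int) -> list[tuple[str, float]]:
    """Return (name, cost) for models that no other model beats on both
    cost (lower) and score (higher), sorted from cheapest to most expensive."""
    priced = sorted(
        ((request_cost(m, input_tokens, output_tokens), m) for m in models),
        # Cheapest first; on equal cost, the higher score comes first.
        key=lambda pair: (pair[0], -pair[1].score),
    )
    frontier: list[tuple[str, float]] = []
    best_score = float("-inf")
    for cost, model in priced:
        # Keep a model only if it outscores every model that costs the same or less.
        if model.score > best_score:
            frontier.append((model.name, cost))
            best_score = model.score
    return frontier


# Hypothetical models: names, scores, and prices are illustrative placeholders.
models = [
    Model("model-a", score=1250, input_price=2.50, output_price=10.00),
    Model("model-b", score=1200, input_price=0.15, output_price=0.60),
    Model("model-c", score=1180, input_price=0.50, output_price=1.50),
    Model("model-d", score=1270, input_price=15.00, output_price=60.00),
]

# Hypothetical workload: 2,000 input tokens and 500 output tokens per request.
for name, cost in pareto_frontier(models, input_tokens=2000, output_tokens=500):
    print(f"{name}: ${cost:.5f} per request")
```

With these placeholder numbers the frontier is model-b, model-a, model-d; model-c drops out because model-b is both cheaper and higher scoring. Choosing among the frontier models is then a per-task judgment about how much extra score is worth the extra cost.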

Books

- Build a Large Language Model (from Scratch) by Sebastian Raschka, PhD

- LLM Engineer's Handbook by Paul Iusztin and Maxime Labonne

- AI Engineering by Chip Huyen

- Hands-On Large Language Models: Language Understanding and Generation, October 2024, Jay Alammar and Maarten Grootendorst

- Building LLMs for Production: Enhancing LLM Abilities and Reliability with Prompting, Fine-Tuning, and RAG, October 2024, Louis-Francois Bouchard and Louie Peters

- LLMs in Production: From Language Models to Successful Products, December 2024, Christopher Brousseau and Matthew Sharp

- Fundamentals of Secure AI Systems with Personal Data, June 2025, Enrico Glerean